# Understanding Emotion Analytics

This section describes TAWNY's take on emotion analytics in more detail. There are many misconceptions and false understandings of how emotions can be measured automatically and oftentimes, important nuances get lost in short marketing messages. For us, it is very important that our clients understand what Emotion AI techniques can offer and what's just superficial marketing lingo. If used correctly, Emotion AI techniques are a powerful addition to your toolbox to improve user experiences and to make better decisions.

We start by stating the obvious: Human emotions are a very complex topic. Most human beings in general are able to send and receive information on the emotional layer intuitively, but even for us humans, it is very difficult to rationally assess human (emotional) behavior.

Nevertheless, centuries of research from many different fields such as psychology and biology as well as sociology and economics have shed some light on what human emotions are, how they are influenced, what they do influence and in what ways they are expressed. There's no doubt that emotions play a fundamental role in social life, how we consume or how we make decisions. Thus, if we were able to somehow assess a human's emotional state or emotional reaction in a certain situation or towards a certain thing, we potentially could draw many interesting conclusions.

One important component of understanding human (emotional) behavior is to actually recognize emotions or emotional reactions. As said before, despite most humans' intuitive usage of emotional clues, we have a hard time formalizing emotion assessments. The question is: How can we actually measure human emotions?

# Measuring emotions

"Unfortunately", there is no interface on the human body which we could plug a cable into to get a real-time stream of that person's actual emotions. So there are only two things we can do to find out about a person's emotional state:

- We can ask the person

- or we can observe the person and look for clues that hint at what that person might actually feel.

Asking the person is a classic technique from psychology and adjacent fields like consumer and behavior research. It can be in the form of interviews or self-assessment questionnaires and all of these techniques have their inherent drawbacks – which you probably already know because you seem to be looking for another approach.

The second option tries to avoid all kinds of self-assessment, with its obvious biases, and resorts to simply observing the person. "Observing" in this case can be many different things, from a human expert actually looking at the subject of interest to measuring various kinds of signals like brain waves, etc. No matter what we are dealing with exactly, we always look for proxies for the actual inner emotional state of the person. Again, these proxies are the centerpiece of vast amounts of research that tries to describe patterns which can be observed on the outside and which allow us to infer the affective state on the inside.

One obvious proxy for a person's emotions is the human face. Besides serving as the entry point into the human body for many things which are necessary to survive (oxygen, food), it is our main tool for communicating – both verbally and non-verbally. Researchers such as the well-known Paul Ekman have put lots of effort into formalizing how humans express emotions through different facial configurations. If we can recognize various facial expressions, we have a first tool to get some clues about a person's inner state.

Facial expressions

(photo by Andrea Piacquadio from Pexels)

It is important to repeat that we are only dealing with a proxy. When we see a happy facial expression (i.e., a smile), we cannot necessarily infer that that person actually is happy. But we have observed a very distinct emotional (re)action which can convey a lot of information, especially if we relate it to the context in which it occurred. So although we might not be able to pinpoint an exact emotional state, we have gotten a very powerful tool to assess how a person experiences a certain situation or a certain stimulus.

# Context matters

Facial expression without context

(photo by Vlad Chețan from Pexels)

Most of the proxies for emotions we can observe from the human body are rather hard to categorize on their own. If we look at the above picture, it is hard to decide whether that person expresses happiness, surprise or rather anger. Zooming out a bit, as in the next picture, gives us a lot more information. The same person with the same expression, now seen raising his hand in a victorious gesture and jumping high into the air, immediately gives an impression of positivity, high energy and cheerfulness.

Facial expression with context

(photo by Vlad Chețan from Pexels)

Besides the interpretation of what a certain expression actually means in a certain context, the context also influences what we consider good, desirable states of a person compared to states we'd like to avoid. To give you two examples:

- When you are building a new application and test the user experience of this app, you probably want to identify (and consequently avoid) moments in the user journey which lead to negative feelings like anger and frustration.

- However, when you are creating a new movie, you might actually want to take the viewer on an emotional rollercoaster ride by alternating between negative feelings like sadness and positive feelings like happiness.

So when you start out with a new project involving emotion analytics, you should clarify both how to interpret certain patterns in the proxy and which emotional reactions you actually deem desirable. For typical applications of the TAWNY platform like market research cases or UX testing, we can help you with the desired emotional reactions and interpretation. If your use case goes beyond these better-known applications, you might need to put some more work into initially answering these questions, but afterwards, it will allow you to get great insights quickly and automatically.

As a final note: When simply talking about emotions, one might tend to imagine longer-lasting, ongoing states of a person, like a general feeling of sadness. However, most applications of emotion analytics techniques are directed towards rather short-term, even event-like emotional reactions. And most of the time, this is actually what you are interested in when doing studies for market research, UX testing, etc.

# Automatic facial expression recognition

So far, we've described the idea of observing proxies for a person's inner state to draw conclusions about how they (emotionally) perceive a certain situation or stimulus and we've highlighted the human face as a prime candidate for such a proxy. Using these insights, we could start applying emotion analytics right away, simply by doing it manually. That also is exactly what you have already done - maybe without thinking about it - for example when you conducted user interviews to test a new product. Maybe you have even formalized this method by taking a video recording of a test user's first experience with the product and analyzed the user's behavior afterwards by watching the video. In all these cases, you probably also took into account the emotional reactions of the user - either consciously or subconsciously.

Manually analyzing the emotional reactions of the user has many drawbacks:

- It is time-consuming

- You might need to review hours of videos to identify only a few situations with interesting emotional reactions

- Splitting the work amongst several people might lead to inconsistent assessments because different people interpret the same reactions differently

- Scaling such studies to tens or hundreds of participants is infeasible

These are some of the problems Emotion AI techniques like ours can mitigate. By automating the analysis of emotional reactions, you get all the benefits without the aforementioned drawbacks.

So how can we actually automate emotion analytics? That is one of the major questions of the research field called Affective Computing and also one of the topics which has seen tremendous advancements in the last decade. One of the core techniques is automatic facial expression recognition. As we have already seen, facial expressions are a very good proxy for a person's actual inner state, so if we can recognize these expressions automatically, a lot of the necessary work is done.

The good news is that through the enormous progress in areas such as Machine Learning and especially Deep Learning, computers have become much better at analyzing images and videos, which is exactly what we need to recognize facial expressions. Put simply: By feeding a computer with large quantities of example images which have been annotated by human experts, we can create so-called Deep Neural Networks which can recognize different facial expressions automatically. That is one of the techniques we use at TAWNY to create the Emotion AI modules that power the TAWNY Platform.

# Emotion categories

Up until now, we have treated the term facial expression rather superficially. While everyone probably has an intuitive idea about different kinds of facial expressions, this topic also has been studied extensively by various researchers. They have come up with essentially three different ways to describe facial expressions:

- By defining categories which describe the usual message these expressions try to convey (e.g., happy, sad)

- By defining distinct (muscular) actions of the face and combinations of these (e.g., facial action units like raised eyebrows or depressed lip corners)

- By locating expressions in a continuous, multi-dimensional space (e.g., in the two dimensions valence and arousal)

These different approaches are also related to each other. For example, emotion categories can usually be described by one or a set of (combinations of) facial action units, and one can usually identify clusters in the multi-dimensional models which each can be related to a certain emotion category.

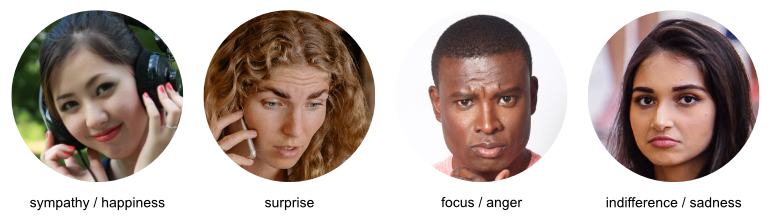

On the TAWNY Platform, we currently try to recognize the presence of four different emotion categories and also try to locate the expression in the dimensions valence and arousal. The four emotion categories on the TAWNY Platform are:

- sympathy / happiness

- surprise

- focus / anger

- indifference / sadness

TAWNY's emotion categories

(photos from Pexels)

If you are not completely new to this topic, you might recognize these four categories as four of the basic emotions as defined by researcher Paul Ekman. He actually defined six basic emotions, namely: happy, surprised, angry, sad, disgusted, fearful (and additionally neutral).

You might wonder: Why does TAWNY have different naming then and why do we offer only four of these emotions? Let's start with the naming: As you can see, we carried over the original terms but added some additional ones. One reason is that - as we've already described - although these terms actually convey some meaning, we are still looking at proxies (i.e., the facial expression) for the actual feeling. Many of our users tended to take the terms too literally, which made it harder for them to draw the right conclusions. By adding more terms, we want to clarify that the actual interpretation of the facial expression might vary - e.g., depending on the context. So taking the category "sympathy / happiness" as an example, it means that expressions from that category usually occur when the observed person reacts sympathetically towards the stimulus, when the situation has made them happy, or when what happened has created a positive feeling in the person.

This general idea is especially important because the original category names of Ekman sound rather intense, whereas intense feelings such as anger usually do not occur when, e.g., watching a TV spot or testing an application - except when the user experience is really, really bad. However, certain (parts of) facial expressions that are categorized as angry typically occur when a person focuses or concentrates on something. The obvious facial action unit here is the frown, i.e., pulling the eyebrows together. If a user interface leads to the user becoming very focused or concentrated, it might be because it is hard to understand. So that's a very subtle form of that angry category, but it is very relevant, e.g., for UX testing.

So basically, by using these more diverse terms, we want to make clear that there are some more nuances within each category, and especially the more subtle examples - as depicted in the next image - are very relevant for many use cases of emotion analytics.

TAWNY's emotion categories - subtle examples

(photos from Pexels)

The reason for focusing on these four emotion categories is that we found them to be the most relevant and interpretable categories in typical use cases from fields such as market research, UX testing, behavior research, etc. While our selection is highly influenced by practical experience, there also is a considerable body of research work proposing different sets of culturally universal basic emotions. A relatively recent attempt at revealing and explaining universal facial expressions of emotion comes from researcher Rachael E. Jack, suggesting that there might actually be four (and not six) latent expressive patterns of facial expressions. These four latent expressive patterns correspond to our selection of emotion categories.

To sum it up, here are the descriptions of the four categories:

Sympathy / Happiness. Facial expressions which are related to the person reacting positively towards the stimulus; can be described as sympathy towards what has been seen or experienced; usually means that the stimulus induced a state of happiness; with regard to action units, the relevant expressions usually contain a smile, from subtle to intense.

Surprise. Expressions which are related to the person being surprised by the stimulus; usually means that something happened that was not expected by the person; can also mean that something did not behave in the way that the person expected it; surprises can be positive or negative; an (intense) negative surprise might be regarded as fear; with regard to action units, the relevant expressions usually contain raised eyebrows and optionally a lowered jaw.

Focus / Anger. Expressions which are related to the person getting angry or confused because of the stimulus; in typical use cases, this means that the person focuses, or is even forced to focus, on the stimulus; can be caused by the person not liking or also not understanding the stimulus; in user experiences it can also mean that the task is more demanding; can also mean that the stimulus really draws the attention of the person, which can be interpreted positively if that is what you want to achieve; with regard to action units, the relevant expressions usually contain frowning and optionally pressed lips / clenched teeth.

Indifference / Sadness. Expressions which are related to the person showing signs of indifference, disappointment or even sadness; often this means that the stimulus did not behave as desired or a certain action did not lead to the intended outcome; in test settings which are not very exciting (maybe even boring), people tend to show these expressions also when nothing in particular happens, which means that here it is especially important to look for significant changes; with regard to action units, the relevant expressions usually contain lowered lip corners.

# Valence / Arousal

Locating emotions in the two-dimensional Valence/Arousal (V/A) space is another scientifically established way of describing emotional states.

The dimension called valence thereby describes how negative or positive a certain state is.

It is a continuous scale from -1.0 (very unpleasant/negative) to +1.0 (very pleasant/positive).

Valence is mostly estimated based on the facial expression of the subject, so positive valence often correlates with more frequent occurrences of sympathy/happiness events while negative valence has a link to the indifference/sadness category.

Arousal is the second component of the V/A space.

It describes the level of activation of the subject, from -1.0 (very inactive / powerless) to +1.0 (very active / excited).

You might wonder why someone can be negatively aroused.

Shouldn't a very calm person just have zero arousal?

A more intuitive way for thinking about the arousal dimension is to relate it to the subject's willingness to act.

So a neutral arousal value (~0.0) signifies that the person is calm but is in a state that would allow them to rise to a higher activation (i.e., the person is awake and somewhat attentive).

A positive arousal value means that the person already is somewhat excited and might be on the verge of acting.

On the other hand, a negative arousal value signifies that the person is in a state in which they have a lack of energy, preventing them from immediate action, e.g., because they are tired or because they are demotivated/depressed.
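The reading of the arousal scale described above can be sketched as a small helper. This is only an illustration: the function name `describe_arousal` and the width of the neutral band (±0.2) are assumptions made for this example, not values used by the TAWNY Platform.

```python
def describe_arousal(arousal: float, neutral_band: float = 0.2) -> str:
    """Map an arousal value in [-1.0, 1.0] to a rough verbal reading.

    neutral_band is a hypothetical tolerance around 0.0; tune it for your data.
    """
    if not -1.0 <= arousal <= 1.0:
        raise ValueError("arousal must lie in [-1.0, 1.0]")
    if arousal > neutral_band:
        return "activated: excited, possibly about to act"
    if arousal < -neutral_band:
        return "deactivated: low energy, e.g. tired or demotivated"
    return "neutral: calm but awake and attentive"


for value in (0.7, 0.05, -0.6):
    print(f"{value:+.2f} -> {describe_arousal(value)}")
```

Where exactly "neutral" ends is a judgment call; in practice you would probably compare values against a participant's own baseline rather than a fixed band.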

TAWNY's arousal estimation is partly based on analyzing the subject's facial expressions, but it also takes into account patterns of movement and even an estimation of the subject's current heart rate. The latter is done using a technique called remote photoplethysmography, which is just a fancy word for "we are analyzing the subtle changes of the subject's skin color which correlate with their heartbeats". The approach is similar to how most smart watches and other wrist-worn wearables read your heart rate. Consequently, this isn't any kind of medical-grade heart rate measurement, but it allows us to infer a general level of arousal - exactly what we need. Making this work with ordinary webcams involves a lot of signal processing magic, but that's what TAWNY takes care of.
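To give a feeling for the basic idea behind remote photoplethysmography, here is a deliberately simplified sketch. It is not TAWNY's implementation: it assumes we already have one average skin-color value per video frame (the hypothetical `samples` input) and simply looks for the heart rate whose rhythm is most strongly present in that signal. The function name, the candidate range of 42 to 180 bpm, and the synthetic test signal are all assumptions made for this example.

```python
import math


def estimate_heart_rate_bpm(samples, fps, min_bpm=42, max_bpm=180):
    """Return the candidate heart rate (in bpm) with the strongest periodicity.

    samples: mean skin-color intensity per video frame (hypothetical input).
    fps: frames per second of the recording.
    """
    mean = sum(samples) / len(samples)
    centered = [s - mean for s in samples]  # remove the constant skin tone

    best_bpm, best_power = min_bpm, -1.0
    for bpm in range(min_bpm, max_bpm + 1):
        freq_hz = bpm / 60.0
        # Correlate the signal with a cosine and a sine at this frequency.
        re = sum(x * math.cos(2 * math.pi * freq_hz * i / fps)
                 for i, x in enumerate(centered))
        im = sum(x * math.sin(2 * math.pi * freq_hz * i / fps)
                 for i, x in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_bpm, best_power = bpm, power
    return best_bpm


# Synthetic 10-second signal at 30 fps: a constant skin tone plus a tiny
# 1.2 Hz (72 bpm) pulse component. Real webcam signals are far noisier.
fps = 30
signal = [0.6 + 0.01 * math.sin(2 * math.pi * 1.2 * i / fps) for i in range(300)]
print(estimate_heart_rate_bpm(signal, fps))
```

A real pipeline additionally has to find and track the face, cope with head movement and changing light, and filter the signal before any frequency analysis, which is where most of the effort goes.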

# Scales

So we've seen that the TAWNY emotion analytics estimates the subject's state in four different emotion categories and also the two dimensions valence and arousal.

These estimations are given in numeric values, between 0.0 and 1.0 for the emotion categories and between -1.0 and +1.0 for valence and arousal.
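To make these value ranges concrete, the following sketch models a single analysis result and checks the ranges on creation. The class name `FrameEstimate`, its field names, and the category keys are hypothetical and chosen for this example only; they do not describe the actual output format of the TAWNY Platform.

```python
from dataclasses import dataclass

CATEGORIES = ("sympathy_happiness", "surprise", "focus_anger", "indifference_sadness")


@dataclass(frozen=True)
class FrameEstimate:
    """One moment of analysis output (hypothetical structure, not the TAWNY API)."""
    categories: dict  # category name -> value in [0.0, 1.0]
    valence: float    # value in [-1.0, +1.0]
    arousal: float    # value in [-1.0, +1.0]

    def __post_init__(self):
        for name in CATEGORIES:
            score = self.categories.get(name)
            if score is None or not 0.0 <= score <= 1.0:
                raise ValueError(f"{name} must be present and within [0.0, 1.0]")
        for label, value in (("valence", self.valence), ("arousal", self.arousal)):
            if not -1.0 <= value <= 1.0:
                raise ValueError(f"{label} must be within [-1.0, +1.0]")


frame = FrameEstimate(
    categories={"sympathy_happiness": 0.8, "surprise": 0.1,
                "focus_anger": 0.05, "indifference_sadness": 0.05},
    valence=0.6,
    arousal=0.3,
)
print(frame.valence, frame.arousal)
```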

But what do these numbers actually mean?

Here we have to make an important distinction.

The values given for the emotion categories describe how sure our model is that at the given moment a certain expression pertaining to the respective category occurred.

As an example: If at a certain point in time, our system returns 0.8 sympathy/happiness, this simply means that it is very likely that there actually was an expression which belongs to that category (probably a smile in this case).

However, the value does not describe the intensity of the expression.

For example, it can be the case that very intense laughing can sometimes look like an angry shouting expression.

Our system in general tries to draw conclusions also from what has happened before and after a certain point in time, but nevertheless there can be situations in which it is just not possible to be sure what an expression actually is.

That would be a typical case in which you might see 0.45 sympathy / happiness combined with 0.35 focus / anger and 0.2 surprise.

On the other hand, a subtle smile but without any doubts might give you 0.9 sympathy / happiness.
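The difference between these two cases can be checked programmatically by comparing the highest value with the runner-up. The sketch below is one possible way to do this; the function name `top_category` and the margin of 0.3 are assumptions for illustration, and the value of 0.0 for indifference / sadness in the first example is filled in only so that all four categories are present.

```python
def top_category(scores, min_margin=0.3):
    """Return (best category, whether it clearly beats the runner-up).

    min_margin is a hypothetical threshold for "clearly", not a TAWNY value.
    """
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    (best, best_score), (_, second_score) = ranked[0], ranked[1]
    return best, (best_score - second_score) >= min_margin


ambiguous = {"sympathy/happiness": 0.45, "focus/anger": 0.35,
             "surprise": 0.2, "indifference/sadness": 0.0}
clear = {"sympathy/happiness": 0.9, "focus/anger": 0.05,
         "surprise": 0.03, "indifference/sadness": 0.02}

print(top_category(ambiguous))  # ('sympathy/happiness', False)
print(top_category(clear))      # ('sympathy/happiness', True)
```

In both cases the top category is the same, but only the second result is one you would want to count as a clear occurrence.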

We'll go into more detail on how to best make use of these values in another article, but as a first suggestion: Often it makes sense to count the number of peaks above a certain threshold (e.g., 0.8) to estimate the number of clear occurrences of a certain category.
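A minimal sketch of such a peak count could look as follows. It treats every uninterrupted stretch of values at or above the threshold as one peak, which is only one of several reasonable definitions; the function name `count_peaks` and the example series are made up for illustration.

```python
def count_peaks(values, threshold=0.8):
    """Count stretches of consecutive values at or above the threshold.

    A stretch counts once, so a smile that lasts several frames is not
    counted multiple times.
    """
    peaks = 0
    above = False
    for value in values:
        if value >= threshold and not above:
            peaks += 1  # entering a new stretch above the threshold
        above = value >= threshold
    return peaks


# Hypothetical sympathy/happiness values over time for one participant.
happiness = [0.1, 0.3, 0.85, 0.9, 0.4, 0.2, 0.82, 0.5, 0.1, 0.95, 0.97, 0.6]
print(count_peaks(happiness))  # 3
```

If the values flicker around the threshold, a short smoothing step or a minimum gap between peaks can keep one expression from being counted twice.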

In contrast to the values given for the emotion categories, the values predicted for valence and arousal actually describe the direction and the intensity in the respective dimension.

That means that 0.7 valence is more positive than 0.2 valence.

Simple as that.

# Conclusion

As we said at the beginning: Human emotions are a complex topic. The goal of the TAWNY Emotion Analytics Platform is not to deny this complexity but to help you make use of the vast potential of emotion analytics despite it. We've seen great results from our clients, generating valuable insights into how people perceive products and services. And we're sure that this can work for you, too. If you need help on your journey, don't hesitate to drop us a line.